Friday, December 19, 2008

r = x + y

r = research

x = depth in existing knowledge

y = new knowledge

If you wait until you have mastered all existing knowledge on a subject before creating new knowledge, you will probably miss the train. If you create new knowledge without mastering the existing knowledge, you will probably duplicate effort and lack depth. The challenge is to find the right balance!

(The equation is extracted from here)

A community index of programming languages

Even if the false positives are minimized, does this index really give a measure of popularity? I think the answer is quite subjective. There may be articles criticizing one language or the other. Some languages could be so easy to use that there is no need for extensive tutorials, references, etc. They may need to take some of the following metrics into account to devise a better index:

-what languages companies use

-tooling and other support (libraries, etc.) available for languages (both commercial and free - should there be a trend based on whether those are commercial or free, it needs to be considered as well)

-what current open/closed code bases use and their sizes, and better yet the growth factor

-giving different weights to blog entries, forum messages, normal articles, tutorials, etc.

-making the search outcome time sensitive

-and so on.

Provided that we have an accurate index, how could this be useful? For different stakeholders, it may be a good decision point. For example, if you are a company developing software, you may want to make sure that you select mainstream languages in the hope that you won't be short of human resources and that support and tooling will be available. If you're a tool developer, both upward and downward trends could create opportunities for you; with an upward trend, you'll have a larger market to target. If a language shows a downward trend, you may want to analyze whether it is due to some gap, such as a lack of tooling support, and fill the gap.

Monday, November 3, 2008

A Security Market for Lemons

Today, I attended a talk by Dr. Prabhakar Raghavan, the head of Yahoo Research. It was quite interesting, and a main takeaway for me was that we, as scientists/engineers, need to seriously consider economic aspects (mainly monetization) along with technical details (correctness, scalability, responsiveness, security, etc.) when designing/developing systems. He nicely explained how Yahoo was losing money 2 years ago because it ordered the list of ads shown to users by bid price alone, without considering other factors such as click rate. (Companies are charged only when users click on their ads, not for showing them. A company can bid high for its ads and take a top spot in the ads bar even though the ads may not be very relevant to users' intent. Such ads continue to enjoy the top spot at little cost, since users hardly click on irrelevant links, while more relevant ads do not get the attention they are due. Meanwhile, the top ads still get unfair attention from users, because brand exposure plays a big part in users' initial selection of products. To prevent such an unfair market, a kind of lemon market for ads, you need to consider other factors in addition to the bids.) Now they have corrected this. Here's how Google ranks ads.
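To make the ranking problem concrete, here is a toy sketch in Python. The ads, bids and click rates are made-up numbers, and ordering by bid times click rate (the expected revenue per impression) is only one simple way to fold click rate into the ranking; it is an assumption for illustration, not Yahoo's or Google's actual formula.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    name: str
    bid: float         # price the advertiser pays per click (hypothetical)
    click_rate: float  # estimated chance that a shown ad gets clicked (hypothetical)


def rank_by_bid(ads):
    """Order ads by bid alone: the highest bidder gets the top spot."""
    return sorted(ads, key=lambda ad: ad.bid, reverse=True)


def rank_by_expected_revenue(ads):
    """Order ads by bid * click rate, i.e. expected revenue per impression."""
    return sorted(ads, key=lambda ad: ad.bid * ad.click_rate, reverse=True)


ads = [
    Ad("irrelevant-but-rich", bid=2.00, click_rate=0.001),
    Ad("relevant", bid=0.50, click_rate=0.05),
    Ad("somewhat-relevant", bid=1.00, click_rate=0.01),
]

print([ad.name for ad in rank_by_bid(ads)])
print([ad.name for ad in rank_by_expected_revenue(ads)])
```

With bid-only ranking the rarely clicked ad sits on top and earns almost nothing; once click rate is taken into account, it drops to the bottom.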

Now to the $subject...

I was curious to find out what a lemon market is all about - especially how it affects security. I must admit that my knowledge of economic concepts is very limited. However, I found some good references about it on the web [1, 2, 3]. Let me try to explain the core:

The concept of lemon markets was introduced by George Akerlof, a joint winner of the Nobel Prize in Economics in 2001, in his 1970 paper "The Market for Lemons: Quality Uncertainty and the Market Mechanism" (it has about 5000 citations and counting in Google Scholar).

What is a lemon market? How do they get formed?

The basic idea is that in a market where sellers have more information than buyers about the product (in the author's terminology, a market with asymmetric information), and where buyers cannot accurately assess the quality of the product, bad products can drive good products out of the market, creating a market for lemons (low-quality products). This happens because buyers get some kind of incentive (e.g. a lower price) in exchange for accepting lemons. You can find the detailed requirements for its formation in the paper.

Akerlof uses the used car market as an example to explain this concept. There are good used cars and defective used cars (lemons). Sellers know which is which, but buyers don't, at least until they purchase a car. Sparing the technical details mentioned in the paper, buyers set the price they are willing to pay a little above the price of an average used car, in the hope that they will end up with a good one. Since good used cars are priced higher than what buyers are willing to pay, good used cars do not get sold and the lemons (the crappy ones) take over the market.
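The unraveling described above can be sketched as a small simulation. This is a simplified toy model, not the exact model in the paper: the list of car qualities, the assumption that a seller values a car at exactly its quality, and the buyer_premium factor of 1.5 (buyers value any car at 1.5 times its quality but can only observe the average) are all assumptions made for illustration.

```python
def unravel(qualities, buyer_premium=1.5, max_rounds=50):
    """Simulate good cars leaving the market round by round.

    Each round, buyers offer buyer_premium * (average quality on the market),
    and every seller whose car is worth more than that offer withdraws it.
    Returns the list of offered prices and the cars still on the market.
    """
    on_market = sorted(qualities)
    prices = []
    for _ in range(max_rounds):
        if not on_market:
            break
        average_quality = sum(on_market) / len(on_market)
        price = buyer_premium * average_quality
        prices.append(price)
        # Sellers know the true quality; they only sell if the price covers it.
        remaining = [q for q in on_market if q <= price]
        if len(remaining) == len(on_market):
            break  # nobody else withdraws, so the market has settled
        on_market = remaining
    return prices, on_market


# Hypothetical market: 100 cars worth 100, 200, ..., 10000 to their sellers.
qualities = [100 * i for i in range(1, 101)]
prices, left = unravel(qualities)

print("offered prices:", prices)
print("cars left on the market:", left)
```

Every time the best cars are withdrawn, the average quality drops, the offered price drops with it, and the next tier of cars leaves; only the cheapest cars remain at the end.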

I think the key reason for the formation of such lemon markets is the lack of credible disclosure of the quality of the products being sold. If we have a trusted independent party to assert the quality, we may prevent lemon markets. For example, we have KBB (Kelley Blue Book) here in the USA to check prices for used cars; it gives a trustworthy measure of how much a used car is actually worth. Even though it has some subjective components in its evaluation, it is better to have something like that than nothing.

Does marketing create lemon markets? I think it contributes towards that; with competition, marketing companies may bend the facts to get a more favorable perception among buyers for certain products. However, it is not clear whether they will survive in the long run. You can find a detailed treatment of it here. With many major online companies providing customer reviews/ratings for products, I think people are becoming more informed about lemons. When I buy from Amazon or other sites, the first thing I do is go through the customer reviews to see their experience with the product - so far it has been quite a reliable way of avoiding lemons.

What are the implications of lemon markets for security? I found this interesting post on the topic, in which Schneier points out some good examples. The bottom line is that there is no market for good security (since mediocre security is cheaper and companies base their decisions mainly on price) unless there is some sort of "signal" (for example, a warranty or a third-party verification) that informs buyers about the differences between similar products in the market. Of course, the "signal" should be trustworthy in order for it to work.

From the lemon paper - the cost of lemon markets:

"The cost of dishonesty, therefore, lies not only in the amount by which the purchaser is cheated; the cost also must include the loss incurred from driving legitimate business out of existence".

Sunday, November 2, 2008

Slideshare: Efficient Filtering in Pub-Sub Systems using BDD

Update: Removed the embedded code from Slideshare as it brings my blog to its knees. (Slideshare does not seem to scale well and it sucks for large presentations.)

Slideshare: Pub-Sub Systems and Confidentiality/Privacy

Update: Removed the embedded code from Slideshare.

Saturday, November 1, 2008

The SQ3R Method

Here's the extract:

The SQ3R method has been a proven way to sharpen study skills. SQ3R stands for Survey, Question, Read, Recite, Review. Take a moment now and write SQ3R down. It is a good slogan to commit to memory in order to carry out an effective study strategy.

Survey - get the best overall picture of what you're going to study BEFORE you study it in any detail. It's like looking at a road map before going on a trip.

If you don't know the territory, studying a map is the best way to begin.

Question - ask questions for learning. The important things to learn are usually answers to questions. Questions should lead to emphasis on the what, why, how, when, who and where of study content. Ask yourself questions as you read or study. As you answer them, you will help to make sense of the material and remember it more easily because the process will make an impression on you. Those things that make impressions are more meaningful, and therefore more easily remembered.

Don't be afraid to write your questions in the margins of textbooks, on lecture notes, or wherever it makes sense.

Read - Reading is NOT running your eyes over a textbook. When you read, read actively. Read to answer questions you have asked yourself or questions the instructor or author has asked. Always be alert to bold or italicized print. The authors intend that this material receive special emphasis. Also, when you read, be sure to read everything, including tables, graphs and illustrations. Oftentimes tables, graphs and illustrations can convey an idea more powerfully than written text.

Recite - When you recite, you stop reading periodically to recall what you have read. Try to recall main headings, important ideas or concepts presented in bold or italicized type, and what graphs, charts or illustrations indicate. Try to develop an overall concept of what you have read in your own words and thoughts. Try to connect things you have just read to things you already know. When you do this periodically, the chances are that you will remember much more and be able to recall material for papers, essays and objective tests.

Review - A review is a survey of what you have covered. It is a review of what you are supposed to accomplish, not what you are going to do. Rereading is an important part of the review process. Reread with the idea that you are measuring what you have gained from the process. During review, it's a good time to go over notes you have taken to help clarify points you may have missed or don't understand.

The best time to review is when you have just finished studying something. Don't wait until just before an examination to begin the review process. Before an examination, do a final review. If you manage your time, the final review can be thought of as a "fine-tuning" of your knowledge of the material. Thousands of high school and college students have followed the SQ3R steps to achieve higher grades with less stress.

Slideshare: A Structure Preserving Approach to Secure XML Documents

The following is from the talk I did at TrustCol 2007 workshop in NY, USA. It is based on our paper "A Structure Preserving Approach to Secure XML Documents". It proposes a new approach to encrypt and sign XML documents without destroying their structure.

Wednesday, October 22, 2008

WSO2 Book Authors

Tuesday, October 21, 2008

Time to cut back by half

math course (MA 153), a first course on Algebra and Trigonometry. (Side Note: I've got to say that even though he is a Management major, he went on to top the class in this course - such was his dedication to the course!)

Having done all my studies up to bachelor's through the education system in Sri Lanka, I realize that this freshman undergrad course here is very similar to the preliminary material covered in Pure Mathematics (now Combined Mathematics) in the Physical Science stream. We spent 3 years on the Advanced Level (A/L) examination and then got selected to follow an engineering degree, which usually takes another 4 years to complete. In between the two courses (A/L and bachelor's), we have to idle around for at least about 1 year. So, if you do the simple math, it is going to be around 8 years; what they achieve in 4 years in the USA takes us 8 years.

It is unfortunate to see that the transition from A/L to bachelor's is not a well-planned, smooth one - some material is repeated and some is not used at all; there's little coordination between the two major courses. I think it is high time we combined these two courses and graduated young people as they approach 20 or in their early 20s, so that they have ample opportunities both in academia and industry. (We first need to iron out practical issues in making such a fundamental change. Any change is quite difficult to initiate - it's the true nature of humankind to resist change, but the choice is ours - do we want to stagnate or progress?)

Thursday, October 9, 2008

CAPTCHA solving/breaking economy

Customer: I want to get xxx number of CAPTCHAs solved.

decaptcher.com: Not a problem. We charge $2 for 1000 CAPTCHAs and the minimum deposit is $8.

Customer: Wow, it's only $0.002 for a CAPTCHA!

Wednesday, October 8, 2008

Can you count the number of soldiers..

The answer is 463!

Notice that 7, 10 and 13 are pairwise relatively prime, so you can apply the Chinese Remainder Theorem! The three congruences x ~ 1 (mod 7), x ~ 3 (mod 10) and x ~ 8 (mod 13) have common solutions, where x is the exact soldier count. Any two common solutions are congruent modulo 910 (that is, 7 * 10 * 13).

Using number theory, we can show that x ~ 463 (mod 910). The solutions are 463, 1373 (463 + 910), 2283 and so on. Since there are fewer than 1000 soldiers, the answer is 463.

(Note: I have used '~' as the congruence symbol, but we usually use the 'equivalent' notation, ≡, for that)
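The computation above can be checked with a short Python sketch of the standard Chinese Remainder Theorem construction. The function name crt is my own; it needs Python 3.8 or later for math.prod and for pow with a negative exponent (which computes a modular inverse).

```python
from math import prod


def crt(remainders, moduli):
    """Solve x = r_i (mod m_i) for pairwise relatively prime moduli.

    Returns (x, M), where M is the product of the moduli and 0 <= x < M.
    """
    M = prod(moduli)
    x = 0
    for r, m in zip(remainders, moduli):
        Mi = M // m              # product of all the other moduli
        inverse = pow(Mi, -1, m)  # Mi * inverse = 1 (mod m)
        x += r * Mi * inverse
    return x % M, M


x, M = crt([1, 3, 8], [7, 10, 13])
print(x, M)  # 463 910
```

Each term r * Mi * inverse is congruent to r modulo its own modulus and to 0 modulo the other two, which is why the sum satisfies all three congruences at once.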

(Courtesy: The Art of War by Sun Tsu in the 17th century; adapted from the Wagstaff's Number Theory book)

Isn't it cool..

Here are some more similar challenges:

Eggs in the basket

Five pirates and a monkey

Saturday, September 27, 2008

CS Ranking in USA

Monday, September 1, 2008

PKC - inventors?

Thursday, July 24, 2008

Keeping Track of Your Laptop for Free

Downloads are still not available, but they have published some papers on this at some good conferences. My first concern is privacy; I don't want others to keep tabs on where I go! According to the researchers, their solution is privacy preserving. I also have some questions about its usefulness, especially when the laptop has been stolen.

This solution works only if the laptop is connected to the Internet and the system is not modified or removed.

How hard is it to remove or stop the service running on the laptop?

How hard is it to spoof location information?

How do we quantify the level of privacy it provides?

Tuesday, July 15, 2008

After Effects of the Recent DNS Patch

According to what I read, DNS was vulnerable to a cache poisoning attack, which gives malicious attackers an easy passport to redirect web traffic and emails to their systems and do all kinds of nasty things. The vulnerability is due to a lack of entropy in the query ID field together with a lack of source port entropy.

I was more interested in the DNS traffic patterns after the large scale patching and found this diagram which shows the traffic in the proximity of the fix date.

The spikes seem to be large-scale DNS attacks. Apparently, we don't see much difference in attack patterns before and after the patch.

Friday, July 11, 2008

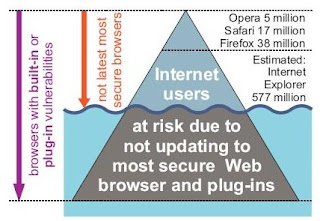

The Browser Insecurity Iceberg

The Web browser Insecurity Iceberg represents the number of Internet users at risk because they don’t use the latest most secure Web browsers and plug-ins to surf the Web. This paper has quantified the visible portion of the Insecurity Iceberg (above the waterline) using passive evaluation techniques - which amounted to more than 600 million users at risk not running the latest most secure Web browser version in June 2008.

Courtesy: Understanding the Web browser Threat: Examination of vulnerable online Web browser populations and the "insecurity iceberg"

They have used Google's search data (made privacy preserving) to gather these statistics. You'll find other interesting statistics in the paper as well. It's a good idea to upgrade our browsers to the latest versions to avoid becoming a target of browser-based attacks.

Doll Test

Thursday, July 10, 2008

Which data model and query language for content management?

As mentioned in the paper, there have been 9 major data model proposals:

Hierarchical (IMS): late 1960’s and 1970’s

Directed graph (CODASYL): 1970’s

Relational: 1970’s and early 1980’s

Entity-Relationship: 1970’s

Extended Relational: 1980’s

Semantic: late 1970’s and 1980’s

Object-oriented: late 1980’s and early 1990’s

Object-relational: late 1980’s and early 1990’s

Semi-structured (XML): late 1990’s to the present

It's a good read for getting a grip on how data models evolved and the lessons learned.

The authors predict that XML will become popular as an 'on-the-wire' format as well as a data movement facilitator (e.g. SOAP) due to its ability to get through firewalls. However, they are pretty pessimistic about XML as a data model in DBMSs, mainly because of its complex query language (XQuery), its complex XML Schema, and the fact that it has only a limited number of real applications (the schema-later approach for semi-structured data) that cannot be handled by OR DBMSs. It seems that if you don't KISS ;-) (Keep it Simple and Stupid), you are going to lose.

Tuesday, July 8, 2008

The Greatest Match Ever

Both players were equally up to the task and you could hardly tell who had the upper hand. However, if you watched the match, you won't disagree with me that Nadal was a little better than Federer on that day. And rightfully Nadal won the title in the end, on his 4th championship point. It was a pretty close match - 6-4, 6-4, 6-7 (5-7), 6-7 (8-10; Nadal missed two championship points), 9-7. (What a sporting feast for Spain, who also recently clinched Euro 2008 by beating Germany.) I was fascinated by the masterclass of tennis played; it was unbelievable how they turned would-be aces and winners into returnable shots, and difficult-to-hit shots into winners. Nadal also went into the record books by being the first player in nearly 3 decades to win the French Open and Wimbledon back to back. This was also the longest Wimbledon final to date (not counting the rain breaks). Both played very professional tennis and I was simply amazed at how graciously both handled the pressure, where an amateur would have screamed or even cried out at a miss. Great Match!

During the rain interruption I was able to watch (thanks to espn360) the Asia Cup final, where our team (Sri Lanka) thrashed India. I was really happy to see Sanath hitting a match-winning century, and Ajantha Mendis, the newly found spinner, was simply unplayable for the Indians. Well done Lions!

Tuesday, July 1, 2008

Out of Print (Newspapers)?

"A bundled product is one that combines a number of products the demands for which may be quite different--some consumers may want some of the products in the bundle, other consumers may want other products in the bundle (sports, weather, politics and you name it)...Bundling is efficient if the cost to the consumer of the bundled products that he doesn't want is less than the cost saving from bundling..." The key takeaway is that a bundled product may be cheaper than the total price of its individual unbundled components.

It is disputable whether bundling or specialization is the way to go in online media. I put my money on specialization as a start-up option, mainly for the following two reasons.

1. There are many more competitors in the online market than in print media.

2. It's easier to aggregate views from many different sources and provide a thorough view of the news at hand.

As the business grows, bundling may be considered to attract a larger audience.

Further, he indicates that the older generation prefers to read hard copies compared to the younger generation. While this may be true for the current demographic spread (maybe because print media was the dominant source when they were young), I think this trend will also change in the future; when people get used to online news while they are young, they'll probably continue to read online (the resistance-to-change factor comes into play).

A few years ago (when I was in Sri Lanka), every Sunday morning I used to read Lakbima, the Sunday Times and/or the Sunday Observer (Sri Lankan newspapers), but here in the USA, I prefer to read news online. I'm not alone; these sources [1, 2] back up the fact that more and more people get information online, shrinking the market for printed newspapers. I think the following, among other things, contributed to this trend.

1. It's much cheaper to circulate information online.

2. Most printed newspapers rely on ads to generate revenue. With the advent of online advertising, including free services (like Craigslist), their ad revenue started to diminish, pushing them to look into alternatives.

I am more interested in the technical challenges in realizing the above trend. A part of my research is directed towards efficient and secure content distribution. Looking at the trend, I think my research can make a positive impact in this area in the future; I always want to do something that is useful to people. Who knows, in the near future, you may see many people reading their morning news on handheld wireless readers!

Tuesday, June 24, 2008

The Security Mindset

"Security requires a particular mindset. Security professionals -- at least the good ones -- see the world differently. They can't walk into a store without noticing how they might shoplift. They can't use a computer without wondering about the security vulnerabilities. They can't vote without trying to figure out how to vote twice. They just can't help it.

...

This kind of thinking is not natural for most people. It's not natural for engineers. Good engineering involves thinking about how things can be made to work; the security mindset involves thinking about how things can be made to fail. It involves thinking like an attacker, an adversary or a criminal. You don't have to exploit the vulnerabilities you find, but if you don't see the world that way, you'll never notice most security problems..."

The biggest question is how we prove that the block of code we have written is correct (no security holes whatsoever). Our proof of correctness depends on our curiosity about what might possibly go wrong - have we considered all the remote and corner cases? It's more or less analogous to theorem proving in Mathematics; we say a theorem is correct only if we can prove it to be correct for all cases.

I agree with him that the lack of a security mindset is a major cause of many of the security problems we experience today; looking at things from an attacker's perspective and analytical (Math) skills can certainly help!

Monday, June 23, 2008

Is XML worth it?

One of the criticisms is that XML is slow to parse compared to flat files such as CSV files. The claim about speed is true. However, the comparison is quite flawed, as it's like comparing apples with oranges. XML provides a parsable definition of a document structure while CSV and similar approaches do not. We need to realize that the benefit comes at an extra cost!

XML-sucks web sites deliver the message that XML is hard to deal with in programs. IMO, this was true in the early days, when XML was invented about a decade ago and there were only a few libraries and tools to work with. Now the situation has changed; there are many libraries (both DOM and SAX) for various languages, which are not that hard to master once you spend some time learning the basics.

Another criticism is that XML is verbose (it uses more bandwidth than required to do the job). In the multimedia age, I don't think the added markup is going to create a huge difference in bandwidth usage (provided that we use namespaces judiciously). Further, there are compression techniques for XML already available which help reduce the message size. If you are really, really concerned about message sizes, and interoperability or the other cool things about XML are not required, I suggest you don't use XML. In my opinion, the gain we get is well worth the price we pay for the additional bandwidth, which is after all textual information.

I agree that while XML itself is quite simple, it gets complicated with the proliferation of related technologies. However, complexity is partly under our control as we decide what we want to use along with XML. The trick is to use only those technologies that are absolutely necessary and have matured to become standards.

XML is for hierarchically structured data and can be overkill for data with no hierarchy. For example, if a property-value file (like in Java) is sufficient for the job, you will not gain anything by using XML but will lose on performance. XML-sucks groups compare data of the latter type in XML with the other alternatives available, which gives a false impression. If your data is not hierarchically structured and you do not have a requirement to use a specific document format, I would go for a flat file format.

Some of the cool features of XML that make it stand out from other approaches:

Addresses the internationalization (i18n for short) issue

If you don't know what encoding the data is in, the data is virtually useless. With the global economy becoming one single market, internationalization of data (which allows it to adapt to various languages and regions without engineering changes) becomes necessary. So, the encoding becomes an important factor. An XML document knows what encoding it's in. There's no ambiguity: an XML document is a sequence of characters, and these characters are coded and then encoded in a specific character encoding. The encoding used decides the number of bytes required for the coded characters (for example, UTF-8 uses 1 to 4 bytes per character). No matter what software you use, it can process any XML document, making it ideal for data exchange.
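A small Python sketch of this point, using the standard library's xml.etree.ElementTree. The greeting element and its text are made up for illustration; the same characters are serialized in two different encodings, and the parser recovers identical text from both because each document declares its own encoding.

```python
import xml.etree.ElementTree as ET

# Hypothetical content with two non-ASCII characters (u-umlaut and sharp s).
text = "Gr\u00fc\u00dfe"
body = "<greeting>" + text + "</greeting>"

# The same document serialized in two encodings, each declaring its own.
utf8_doc = b'<?xml version="1.0" encoding="UTF-8"?>' + body.encode("utf-8")
latin1_doc = b'<?xml version="1.0" encoding="ISO-8859-1"?>' + body.encode("iso-8859-1")

# The parser reads the declaration and decodes the bytes accordingly.
parsed_utf8 = ET.fromstring(utf8_doc).text
parsed_latin1 = ET.fromstring(latin1_doc).text

print(parsed_utf8 == parsed_latin1 == text)
print(len(text.encode("utf-8")), len(text.encode("iso-8859-1")))
```

The byte counts differ (the two non-ASCII characters take two bytes each in UTF-8 and one byte each in ISO-8859-1), yet the parsed characters are the same.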

Enforces creating well-formed documents

XML syntax enforces proper nesting, which allows you to verify that the document is structurally correct to a certain degree.
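As a minimal sketch of such a check, the following Python snippet uses the standard library parser, which rejects documents that are not well-formed. The helper name is_well_formed and the sample documents are my own.

```python
import xml.etree.ElementTree as ET


def is_well_formed(document: str) -> bool:
    """Return True if the parser accepts the document, False otherwise."""
    try:
        ET.fromstring(document)
        return True
    except ET.ParseError:
        return False


print(is_well_formed("<order><item>pen</item></order>"))  # properly nested
print(is_well_formed("<order><item>pen</order></item>"))  # tags overlap
```

Note that this only checks structure; whether the document contains the right elements and attributes is the job of validation against a DTD or schema.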

Can enforce the creation of valid documents

In addition to proper nesting, you may need to make sure the document includes only certain elements and attributes, their data types, etc. These documents are declared to meet a DTD or XML Schema (successor of DTD). The nice thing about XML Schema is it itself is written in XML and there are a whole bunch of validation tools out there to validate the XML document against the schema.

Promotes syntax-level interoperability

In the software industry, interoperability has been one of the hardest goals to achieve. People initially thought that having well defined API's is the way to go. However, this approach does not work across heterogeneous operating systems. If you look at the success of the Internet and its related technologies, one thing is common; they all have bits-on-the-wire definitions (i.e. syntactic level).

A safe way of keeping data long term

As we all know, information/data outlives the technologies we have today. Therefore, keeping data in proprietary formats may not be a good choice, especially if the data is expected to be used much later down the timeline. XML solves this problem as it's an open specification and is not tied to any specific technology.

To sum up, if you use XML only for those tasks for which it is designed to be used, it can pay you off; XML is not a solution to every data problem we encounter.

Main References:

Why XML Doesn't Suck

Tuesday, June 3, 2008

For a Good Cause

Monday, June 2, 2008

Ubuntu + PHP + MySQL

sudo apt-get install apache2

sudo apt-get install mysql-server libmysqlclient15-dev

sudo apt-get install php5 php5-common libapache2-mod-php5 php5-gd php5-dev

You can set a root password for MySQL server with the following command (initially the root password is blank).

sudo /etc/init.d/mysql reset-password

Now php should work along with apache2. However, we still need to get it glued with MySQL. Installing phpmyadmin does the trick.

sudo apt-get install phpmyadmin

For PHP to work with MySQL, we need the php5-mysql package. Installing the phpmyadmin package above automatically installs php5-mysql for you. Now you should see a section for MySQL in phpinfo(). If you go to http://localhost, you will see a folder called phpmyadmin which provides a web interface to your database server.

If you happen to forget the root password of your MySQL (which happened to me), you can do the following to reset.

First stop the already running MySQL server:

sudo /etc/init.d/mysql stop

Start MySQL in safe mode with privilege checking switched off:

sudo mysqld_safe --skip-grant-tables &

Log into MySQL as root user:

mysql --user=root mysql

Execute the following:

update user set Password=PASSWORD('new-password') where User='root';

flush privileges;

exit;

Now kill the MySQL daemon started in safe mode and you are all set to work in the regular mode.

Monday, May 12, 2008

University of Moratuwa, Sri Lanka, on top of the GSoC list

Getting started is the hardest part of anything. If we look at the last 3 years, I recognize one person, Dr. Sanjiva Weerawarana, who, I am sure, would be happier than anyone else to see these current figures. Dr. Weerawarana has been encouraging and helping university students to participate in open source projects, including GSoC, through LSF and WSO2 as well as in his role as a part-time lecturer. We should also recognize the effort by the faculty and the heads of the departments (including my undergrad department) of the University of Moratuwa to drive students to obtain real-world experience through practical open source projects.

Go Mora!

Saturday, March 29, 2008

Google Error #102

Thursday, February 28, 2008

WSDL to PHP Classes

Tuesday, February 19, 2008

Format war is over

What is the situation of the people who bought HD DVD devices? Apparently there are over 1 million users worldwide.

Now that the battle is over, at least new customers don't need to worry about which format to go with. With no more competition, can we expect the prices to go down? This move may bring the prices of Blu-ray players and devices down, thanks to additional profit from patents and high-volume sales.

Sunday, February 17, 2008

Grand Challenges for Engineering/Sciences

The list:

-Advance health informatics

-Engineer better medicines

-Make solar energy economical

-Provide access to clean water

-Reverse-engineer the brain

-Advance personalized learning

-Engineer the tools of scientific discovery

-Manage the nitrogen cycle (What this basically means is to find ways to keep the bio-geochemical nitrogen cycle in balance, as it is being altered by increased use of fertilizers and industrial activities. The resulting imbalance contributes to, among other things, smog, acid rain, polluted drinking water and global warming.)

-Provide energy from fusion

-Secure cyberspace (identity thefts, viruses, etc.)

-Develop carbon sequestration methods (methods to capture carbon dioxide produced from vehicles and factories from burning fossil fuels, a major culprit of global warming)

-Enhance virtual reality

-Prevent nuclear terror (I think this is more political than technological. We already have the technology in place; it's a matter of how we use it only for purposes that benefit humankind.)

-Restore and improve urban infrastructure

There's a good introduction to this on their website.

I personally think the following are especially important (from a technological point of view but not political):

-"Economical" clean energy sources to substitute for fossil fuels (fast diminishing)

-Methods to remove carbon dioxide and cycle nitrogen to control global warming

-Methods to prevent, detect and recover from cyber attacks. With more and more people/businesses doing their financial transactions online, we need effective methods to protect them from malicious attackers.

Saturday, February 16, 2008

Purdue Fulbright Association

To prevent spam, I use a simple request-response protocol on this site instead of CAPTCHA, as I don't see strong security requirements for this web site. I have also developed a (stronger) purely server-side approach which uses AJAX to make things efficient (it avoids a complete page reload when talking to the server). I will write about it in a future blog post when I get some free time, so that it might be helpful for those looking for such solutions.

Saturday, February 9, 2008

Secret Sharing

The basic idea behind Shamir's (t, n) threshold scheme (t <= n), which is based on Lagrange interpolating polynomials, is to split the secret among n people such that you need at least t of them to reconstruct it. (Example: 5 people have formed a business and you don't want to allow access to the company bank account unless a majority agrees. You choose ceiling(5/2) <= t <= 5.)

How it works: t points in the Cartesian plane, (x1, y1), ..., (xt, yt), with distinct x values uniquely identify a polynomial of degree <= t-1. That is the Lagrange polynomial. You define a polynomial f(x) = a_0 + a_1.x + ... + a_{t-1}.x^{t-1}, where a_0 represents the secret you want to split among n people and the other coefficients are chosen at random. You randomly choose n points on f(x) and give one to each person. If you have at least t points, you can use Lagrange interpolation to determine the unique polynomial corresponding to those points. The constant term gives you the secret value. In practice, we usually do the arithmetic modulo a prime p (i.e. f(x) mod p) to bound the values without revealing any more information than the scheme already reveals. There have been numerous extensions to the above idea in the literature, some of which are available in the page I mentioned above.
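The steps above can be sketched in a few lines of Python. This is only a minimal illustration, not a vetted implementation: the function names `split_secret` and `recover_secret` and the choice of prime are my own assumptions, and for simplicity the shares are taken at x = 1..n rather than at randomly chosen x values.

```python
import secrets

# Assumption: a fixed prime modulus larger than any secret we want to share.
PRIME = 2**127 - 1  # a Mersenne prime


def split_secret(secret, t, n, prime=PRIME):
    """Return n points on a random polynomial of degree <= t-1 with f(0) = secret."""
    if not (1 <= t <= n < prime):
        raise ValueError("need 1 <= t <= n < prime")
    if not (0 <= secret < prime):
        raise ValueError("secret must be in [0, prime)")
    # a_0 is the secret; a_1 .. a_{t-1} are random coefficients.
    coeffs = [secret] + [secrets.randbelow(prime) for _ in range(t - 1)]

    def f(x):
        # Horner's rule, with every step reduced mod prime.
        result = 0
        for c in reversed(coeffs):
            result = (result * x + c) % prime
        return result

    # x = 0 is never handed out, because f(0) is the secret itself.
    return [(x, f(x)) for x in range(1, n + 1)]


def recover_secret(shares, prime=PRIME):
    """Lagrange interpolation evaluated at x = 0, working mod prime."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % prime
                den = (den * (xi - xj)) % prime
        # pow(den, -1, prime) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, prime)) % prime
    return secret
```

For the 5-person business example with t = 3, `split_secret(1234, 3, 5)` produces five shares, and passing any three of them to `recover_secret` gives back 1234.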

Sunday, February 3, 2008

Diamond for whom?

(Update: And the most brutal terrorist group (some information in this wiki page may not be fully consistent, as I can see a lot of undoing going on. As a side note, Wikipedia should come up with a way to detect suspicious modifications and maintain authenticity) marked the day with a series of suicide bomb attacks [1, 2, 3], killing innocent civilians.)

Saturday, February 2, 2008

Rest in 10 Minutes

REST stands for REpresentational State Transfer. The key idea behind REST is resources. Everything in the networked world is a resource.

Each resource can be uniquely identified by a URI (E.g.: URLs in the WWW). The WWW is a good example of RESTful design.

These resources can be linked together (E.g.: Hyperlinking in the WWW).

There's a standardized protocol for clients to interact with the resources. (E.g.: Web browsers using HTTP's standard methods such as GET, POST, PUT and DELETE).

It allows you to specify the format in which you want to interact. (E.g.: HTTP Accept headers such as text/html, image/jpeg, etc.)

It's a stateless protocol. (E.g.: HTTP is stateless)

I will now walk you through a simple PHP code snippet to invoke REST services using the GET method.

The idea is as follows.

You get the base URL of the resource you want to access. (E.g.: The base URL of the Yahoo Traffic REST service is http://local.yahooapis.com/MapsService/V1/trafficData).

You URL-encode the parameters expected by the service to indicate your intention. (baseurl?name1=value1&...&namen=valuen)

You get the result back, usually in XML format, which you can manipulate easily.

A word about implementations. There are SOAP libraries (e.g.: WSF/PHP) out there which allow you to invoke RESTful services. You can also access such services with the libraries already built into PHP. Today, I will take the latter approach and will look into the former approach in a future blog entry.

There are mainly two ways to implement REST clients with built-in PHP libraries.

1. Using cURL

2. Using file_get_contents()

I used the first approach.

Here's how you'd access some of the services provided by Yahoo.

Yahoo Locations:

<?php

include_once('RESTClient.php');

$base = 'http://local.yahooapis.com/MapsService/V1/geocode';

$params = array( 'appid' => "YahooDemo",

'street' => '271 S River Road',

'city' => 'West Lafayette',

'state' => 'IN',

);

$client = new RESTClient();

$res = $client->request($base, $params);

// Output the XML

echo htmlspecialchars($res, ENT_QUOTES);

$xml = new SimpleXMLElement($res);

echo "<br/>Latitude : " . $xml->Result->Latitude . "<br/>";

echo "Longitude : " . $xml->Result->Longitude . "<br/>";

echo "Address : " . $xml->Result->Address . " " . $xml->Result->City . " " . $xml->Result->State . " " .

$xml->Result->Zip . " " . $xml->Result->Country;

?>

Output:

Latitude : 40.419785

Longitude : -86.905912

Address : 271 S River Rd West Lafayette IN 47906-3608 US

Yahoo Traffic:

<?php

include_once('RESTClient.php');

$base2 = 'http://local.yahooapis.com/MapsService/V1/trafficData';

$params2 = array( 'appid' => "YahooDemo",

'street' => '701 First Street',

'city' => 'Sunnyvale',

'state' => 'CA',

);

$client = new RESTClient();

$res2 = $client->request($base2, $params2);

// Output the XML

echo htmlspecialchars($res2, ENT_QUOTES);

?>

Output:

[XML omitted]

Severity[1-5, 5 being the most] : 2

Description : NORTHBOUNDLONGTERM FULL RAMP CLOSURE CONSTRUCTION

Yahoo Map Image:

<?php

include_once('RESTClient.php');

$base3 = 'http://local.yahooapis.com/MapsService/V1/mapImage';

$params3 = array( 'appid' => "YahooDemo",

'street' => '271 S River Road',

'city' => 'West Lafayette',

'state' => 'IN',

);

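$client = new RESTClient();

$res3 = $client->request($base3, $params3);

$xml3 = new SimpleXMLElement($res3);

// The root element of the response is assumed to hold the URL of the map image

echo "<img src='" . $xml3 . "'/>";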

?>

As you might have noticed, I have included 'RESTClient.php' above. Here's the code for the RESTClient class.

<?php

//Curl based REST client for GET requests

class RESTClient {

//request takes two parameters

//$baseUrl - base URL of the resource you want to access

//$params - an associative array of parameters you want to pass with the request

public function request($baseUrl, $params) {

$queryString = '';

$responseXml = null;

$response = null;

$resourceUrl = '';

foreach ($params as $key => $value) {

$queryString .= "$key=" . urlencode($value) . "&";

}

$resourceUrl = "$baseUrl?$queryString";

// Initialize the session giving the resource URL

$session = curl_init($resourceUrl);

// Set curl options

curl_setopt($session, CURLOPT_HEADER, true);

curl_setopt($session, CURLOPT_RETURNTRANSFER, true);

// Make the request

$response = curl_exec($session);

// Close the curl session

curl_close($session);

// Get HTTP Status code from the response

$statusCode = array();

preg_match('/\d\d\d/', $response, $statusCode);

// Check the HTTP Status code

switch( $statusCode[0] ) {

case 200:

break; //OK

case 503:

echo('Failed: 503 : Service Unavailable.');

break;

case 403:

echo('Failed: 403 : Access Forbidden.');

break;

case 400:

echo('Failed: 400 : Bad request.');

break;

default:

echo('Unhandled Status:' . $statusCode[0]);

}

if ( $statusCode[0] == 200) {

$responseXml = strstr($response, '<?xml'); //stripping off HTTP headers

}

return $responseXml;

}

}

?>

Friday, February 1, 2008

Is Lighter Better?

It's a good read up on "colorism" and to get to know about people's experiences with skin-tone.

When to/not to Use Web Services

When to use Web Services:

-To integrate heterogeneous systems (different platforms, different languages)

-When you don't know the client environment in advance (For example, Web Services APIs provided by amazon.com, numerous mash-up servers, etc.)

-When you want to have the same data in different presentations (This is due to the fact that messaging is purely based on XML, and XML can easily be converted to different document formats)

-To make web site content available as a service (Think of it as a replacement for RSS feeds)

-To provide different entry points to the same data repository. (For example, data services provide an abstraction for database access)

-To interface with legacy systems

-To build B2B electronic procurement systems

-To expose software as a service

-To reuse existing components; unlike traditional middleware technologies, which essentially use a component-based model of application development, Web services allow almost zero-code deployment.

When not to use Web Services :

-It is not the most efficient way (in terms of response time) to transfer data compared to RPC, CORBA, etc., which use native binary messages. Until we find efficient ways to handle XML, web services may not be suitable for systems with stringent real-time timing requirements.

-Message size is larger than that of its predecessors (of course it depends on the encoding used, and the standardized text added to the message also contributes to this). It may not be suitable for high data volume systems.

Monday, January 28, 2008

Go Purdue!

Sunday, January 27, 2008

8 Design Principles of Information Protection [Part 2]

5. Separation of Privilege

We break up a single privilege among multiple components or people so that a collective agreement between those components or people is required to perform the task controlled by the privilege. Multi-factor authentication is a good example of this; in addition to some biometric authentication (what you are) such as a fingerprint or iris scan, the system may require you to provide an ID (what you have) to gain access. A good day-to-day example is a check that requires multiple signatures. As a side note, operating systems like OpenBSD have implemented privilege separation in order to step up the security of the system.

Secure multi-party computation in cryptography (first introduced by Andrew C. Yao in his 1982 paper; the millionaire problem) is related to this concept in that you cannot perform the required computation without the participation of all the participants; this scheme has the added benefit that no participant learns anything more about the secrets that the other participants hold.

Secure secret sharing (which is different from a shared secret like a symmetric key) in cryptography is another good example. There have been many secret sharing schemes since Adi Shamir and George Blakley independently invented the idea in 1979. The basic idea is that you distribute portions of a secret to n people in the group and you need the input of at least t (<= n) people in order to reconstruct the secret.

In programming terms, it's simply a logical && (AND) condition:

if (condition1 && condition2 && ... && conditionn) { /* perform the task */ }
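As a small illustration of that AND condition, here is a hedged Python sketch of a multiple-signature check. The function name `can_release_payment` and the signer names are made up for the example.

```python
def can_release_payment(approvals, required_signers):
    """Allow the action only if every required signer has approved it."""
    # Equivalent to condition1 && condition2 && ... && conditionn.
    return all(signer in approvals for signer in required_signers)


# Hypothetical signers, for illustration only.
REQUIRED = ["alice", "bob"]

print(can_release_payment({"alice", "bob"}, REQUIRED))  # both have signed
print(can_release_payment({"alice"}, REQUIRED))         # bob has not signed
```

No single signer can perform the task alone; the privilege only exists when all of them agree.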

6. Least Privilege

Some people confuse this with principle 5, but it is very different from the separation of privilege principle. It simply says that every program and every user should be granted only the minimum privileges they require to carry out their tasks. The rationale is that this limits the damage caused to a system in case there's an error. For example, if a reader only needs to read files, giving it both read and write access to those files would violate this principle.

All the access control models (MLS, MAC, DAC, RBAC) out there (for example, the Biba integrity model of 1977, the Bell-LaPadula model of 1973 and the Clark-Wilson integrity model of 1987) try to achieve this security principle.

I find some similarity between breaking a program into small pieces and this principle; it allows you to call only the required tiny functions to get the work done without invoking unnecessary instructions.
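The reader example can be sketched in Python as follows. This is only an illustration under my own assumptions: the `Permission` flags and the `is_allowed` helper are hypothetical names, not part of any real access control system.

```python
from enum import Flag, auto


class Permission(Flag):
    NONE = 0
    READ = auto()
    WRITE = auto()


def is_allowed(granted, requested):
    """True only if every requested permission bit has been granted."""
    return (granted & requested) == requested


# A reader is granted READ and nothing more.
reader = Permission.READ

print(is_allowed(reader, Permission.READ))   # the reader can read
print(is_allowed(reader, Permission.WRITE))  # but cannot write
```

If the reader's code has a bug, the worst it can do is read; it was never given the ability to modify the files.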

7. Least Common Mechanism

The key idea behind this is to minimize shared resources such as files and variables. Shared resources are a potential information path which could compromise security. Sharing resources is a key aspect of Web 2.0; while isolation could give us perfect security, we would gain little benefit from the system's intended use. So, we need to share information while preventing unintended information paths/leakages.

You can think of DoS attacks as a result of sharing your resources over the Internet/WAN/LAN with malicious parties. While we cannot isolate web resources, there are already many mechanisms, including proxies, to restrict such malicious uses and promote only the intended use.

In programming terms, you're better off using local variables as much as possible instead of global variables; it is not only easier to maintain but also less likely to be hacked.
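Here is a hedged Python sketch of the difference. All names (`_shared_state`, `Session`, the token values) are invented for the illustration; the point is only that state kept in a shared global is visible to every caller, while state kept per object is not.

```python
# Shared mechanism: one module-level dictionary used by every caller.
_shared_state = {}


def remember_token_shared(token):
    _shared_state["last_token"] = token


def last_token_shared():
    return _shared_state.get("last_token")


# Minimized sharing: each session keeps its own state.
class Session:
    def __init__(self, user):
        self.user = user
        self._token = None

    def remember_token(self, token):
        self._token = token

    def last_token(self):
        return self._token


# Alice stores a token through the shared mechanism...
remember_token_shared("alice-token")
# ...and any other caller can read it back: an unintended information path.
print(last_token_shared())

alice, bob = Session("alice"), Session("bob")
alice.remember_token("alice-token")
print(bob.last_token())  # bob's session never sees alice's token
```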

8. Psychological Acceptability

You cannot make a system secure at the cost of making it difficult to use! Ease of use is a key factor in whatever system we build. We see a lot of security mechanisms, from policy enforcement to access control, but they focus little on the usability aspects. Further, users are more likely to make mistakes if the system is not intuitive to use.

If you are designing API's, make sure you think about the user first!

Saturday, January 26, 2008

As You May Think

Having considered the plight of a researcher deluged with inaccessible information, Vannevar Bush proposed the memex, a machine to rapidly access, and allow random links between, pieces of information. The idea was to link books and films and automatically follow cross-references from one work to another. Doesn't that sound similar to what Tim Berners-Lee invented in 1989? Although the way Bush viewed hyperlinks was quite different from what we have today, I am sure it must have inspired the concept of the WWW and hyperlinks. He went on to talk about sharing information with colleagues and wide publication of information: the whole point of Web 2.0. (His ideas on how to realize this vision may not be perfect, considering the technological advances we have had since he put them forward. However, we must admit that his thinking was similar to many famous speculations in science and technology, from computation to communication.)

Here's the link to the complete article published in Atlantic Monthly.

A man/woman on mars?

http://www.space.com/scienceastronomy/080124-bad-mystery-mars.html

Sunday, January 13, 2008

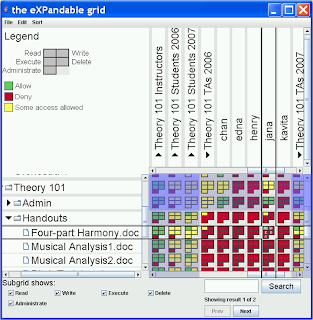

Expandable Grids for Visualizing and Authoring Security/Privacy Policies

Getting back to the problem they were trying to solve, all existing policy representations are basically one-dimensional (take Windows file permissions and natural language privacy policies, for example) and this may be a potential usability problem. Their solution was to introduce two-dimensional expandable grids to represent the same information. The surveys they had conducted showed mixed results.

Here are some of the examples he demonstrated:

An application for authoring Windows XP permissions.

Rows represent files (expanding folders) and columns users (expanding user groups). A cell represents the kind of permissions a user has on a file, a user has on a folder, a user group has on a file or a user group has on a folder (4 possibilities). Their surveys conducted on the above vs. Windows XP permissions representation have shown positive results.

Another example was to represent P3P policies. Usually, web sites specify P3P policies in natural language (English) which is quite difficult to follow. Here's a snapshot of their approach.

Their preliminary online surveys comparing the usability of this approach vs. the sequential natural language approach have not produced promising results; their initial work was as bad as the natural language approach or worse. Some of the following reasons, including the ones that the authors have suggested, may have contributed to this:

1. A lot of information has been condensed into a short space.

2. The short terms introduced in place of natural language descriptions may not be intuitive to users who are not that familiar with these types of policies. (Loss of information during conversion)

3. A user needs to remember many types of symbols that can be applied to policies, and these are not standardized notations; users simply don't want to go that extra mile to memorize proprietary symbols just to protect their privacy. A standardized set of symbols may help.

4. Also, the symbols used are quite similar to one another and do not reflect the operations they are supposed to represent.

5. The color scheme used is not appealing to me either.

(From a user's point of view, I hope the above information is useful to come up with a better user interface for the grid if someone in the group happens to read my blog!)

I also have some issues related to scalability and other things:

How does their grid scale when there are many X and Y values?

What can be done to improve the grid when it is sparse?

Can it be loaded incrementally to improve performance?

How do we represent related policies?

How do we systematically convert one-dimensional representation into a grid without loss of information?

Although their initial work has had mixed success, I think that with improvements it can have a positive impact not only on OS permissions and policy authoring but also on other domains such as representing business rules, firewall rules, web services policies, etc.

Saturday, January 12, 2008

8 Design Principles of Information Protection [Part 1]

1. Economy of Mechanism - Keep the design as simple and small as possible. When it is small, we can have more confidence, through various inspection techniques, that it meets the security requirements. Further, when your design is simple, the number of policies in your system becomes small, and hence they are less complicated to manage and use (you will have only a few policy inconsistencies). Complexity of policies is a major drawback in current systems, including SELinux. Coming up with policy mechanisms that are simple yet cover all aspects is a current research topic.

2. Fail-safe Default - Base access decisions on permission rather than exclusion. In a design based on explicit permission, a mistake will deny access to a subject that should be allowed. This is a safe situation and can easily be spotted and corrected. On the other hand, in a design based on explicit exclusion, a mistake will allow access to a subject that should not be allowed. This is an unsafe situation and may go unnoticed, compromising the security requirements. Putting it in pseudo code:

correct: if (explicitly permitted) { allow } else { deny }

incorrect: if (explicitly excluded) { deny } else { allow }

The bottom line is that this approach limits, if not eliminates, unauthorized use/access.
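To make the permission-versus-exclusion contrast concrete, here is a minimal Python sketch. The user names, file names and the two helper functions are assumptions made up for the example.

```python
def can_read_allowlist(user, filename, permitted):
    """Fail-safe: access is granted only if it was explicitly permitted."""
    return filename in permitted.get(user, set())


def can_read_denylist(user, filename, excluded):
    """Not fail-safe: access is granted unless it was explicitly excluded."""
    return filename not in excluded.get(user, set())


# Hypothetical policy data.
permitted = {"alice": {"report.txt"}}
excluded = {"bob": {"report.txt"}}

# "mallory" was forgotten in both policies (a design mistake).
print(can_read_allowlist("mallory", "report.txt", permitted))  # denied by default
print(can_read_denylist("mallory", "report.txt", excluded))    # allowed by default
```

The same omission leads to a refused request in the first design, which someone will notice and report, and to a silent leak in the second.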

3. Complete Mediation - Check permission for every object a subject wants to access. For example, if a subject tries to open a file, the system should check whether the subject has read permission on the file. For performance reasons, Linux does not completely follow this rule: when you read/write a file, permissions are checked the first time and are not checked thereafter. SELinux can enforce the complete mediation principle based on policy configurations, which is one of its strengths. However, the policy configuration in SELinux is somewhat complex, moving us away from the first design principle, Economy of Mechanism.

In programming terms, we should validate the input arguments before doing any processing with them.

fun(args) { if (args not valid) { reject } else { process request } }
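A hedged Python sketch of the same idea: the permission is checked on every access rather than once up front, so a revocation takes effect immediately. The `GuardedDocument` class and its methods are hypothetical names for this illustration.

```python
class GuardedDocument:
    """A document that re-checks the caller's permission on every read."""

    def __init__(self, content, readers):
        self._content = content
        self._readers = set(readers)

    def revoke(self, user):
        self._readers.discard(user)

    def read(self, user):
        # The check happens on each call; nothing is cached from earlier reads.
        if user not in self._readers:
            raise PermissionError(f"{user} may not read this document")
        return self._content


doc = GuardedDocument("quarterly numbers", readers={"alice"})
print(doc.read("alice"))  # allowed

doc.revoke("alice")
try:
    doc.read("alice")
except PermissionError as err:
    print("denied:", err)  # the revocation is honored on the very next access
```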

4. Open Design - We cannot achieve security by obscurity! This is where the strength of open source shines. An open design allows wide-spread public review/scrutiny of the system, letting users evaluate the adequacy of the security mechanism. While the mechanism is public, we depend on the secrecy of a few changeable parameters like passwords and keys. Take an encryption function: the algorithm is there for anyone to take; the keys are the only secrets.

From a programming perspective, never hard-code any secrets into your code!

I will write about the last 4 principles in my next blog entry.

5. Separation of Privilege
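One common way to follow this advice is to read the secret from the environment at run time. The sketch below assumes a hypothetical environment variable named `APP_API_KEY`; the name and the `load_api_key` helper are illustrative only.

```python
import os


def load_api_key(env_var="APP_API_KEY"):
    """Read the key from the environment instead of embedding it in the source."""
    key = os.environ.get(env_var)
    if not key:
        # Fail loudly rather than falling back to a built-in default secret.
        raise RuntimeError(f"environment variable {env_var} is not set")
    return key
```

The code can then be published and reviewed openly, while the key stays a changeable parameter that lives outside the source tree.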

6. Least Privilege

7. Least Common Mechanism

8. Psychological Acceptability